A little while back, I saw this tweet from @hugo_le_moine_, detailing how he is using the backup feature from his podcast app to display listening data on a Streamlit site. Since he happens to use Podcast Addict, which is the same app that I’ve been using since 2019, I thought it would be fun to try building something similar in the stack(s) that I’m most familiar with. At the time, I had no idea what I was really getting myself into.

My plan for this article is to outline how I built this process from end-to-end, including the pitfalls I ran into along the way. If you have any questions or comments while reading, please feel free to drop a comment below, or send me an email with the link at the bottom of the page.

Before I go any further, here is a link to the final product.

Overview

The primary goal for this project was to automate a process to display my podcast listening stats at podcaststats.andrewpetz.com. The process would be as follows:

- Podcast Addict creates a scheduled automatic backup. In my case, this is every night at 3am. This backup is a file with a

.backupextension, which can be changed to.zipand unzipped to reveal apodcastAddict.dbSQLite database file. - Once a week (Sunday morning at 2am) my phone will sync the Podcast Addict backup folder with a folder in my Google Drive. This is done using the Android app FolderSync, which has a great built-in Google Drive integration.

- Assuming that the backup works correctly, I will run an Azure Function once a week (Sunday morning at 3am) to update a static website.

- The static website displays the listening data using Chart.js.

Setting up Podcast Addict

Podcast Addict has an automatic backup setting, under App Settings -> Backup / Restore. I created a new folder on my phone and specified that folder as the default backup location. I also set a backup interval of every 24 hours and a time of 3am. All of this means that every morning at 3am, Podcast Addict creates a new backup file in the folder that I specified in the settings.

FolderSync

To setup FolderSync, I first connected my Google Drive account. I created a new folder within my Google Drive at Backups/Podcasts to store my backups from PodcastAddict, then created a new folder pair in FolderSync that connects the local folder with the Google Drive folder. This folder pair uses the following settings:

- Sync type: to remote folder

- Remote folder:

/Backups/Podcasts - Local folder:

/storage/emulated/0/Backups/PodcastAddict - Scheduled sync: enabled

- Sync interval: Sunday at 2am

I think all the other options that I used are defaults, but to be honest I set this up a while ago and I don’t remember all of the steps I had to take.

The Azure Function

Ok, let’s get into the meat and potatoes of this project. So far, all we’ve really done is get an automatic backup uploaded to Google Drive every Sunday. Now what can we do with that backup file?

Since I’m a .NET developer, I decided to run this scheduled task to update my static site using an Azure Function. This allows me to create a program that is automatically scheduled to run at certain intervals on Microsoft Azure, and I can write it in my favorite language, C#.

Here’s a quick overview of what exactly this scheduled task does. This is far from my original ideas, but it’s what I ended up with.

- Connect to Google Drive, download the

podcastAddict.dbbackup file, and unzip it. - Using the

Microsoft.Data.Sqlitelibrary, open the database file and extract the data that I need into twoList<T>objects - aList<Podcast>and aList<Episode>. I’ll explain these objects later on. - Use these lists to upload podcast thumbnails to Azure Blob Storage for display on the site.

- Use these lists to create more lists, each of which corresponds to a set of data to display on the site.

- Create CSV files from the data lists using the CsvHelper Nuget package.

- Upload these CSV files to Azure Blob Storage.

- Purge the Bunny CDN pullzone cache using their API.

Now let’s go through these steps, one at a time.

Connect to Google Drive

First, I created a new Google Cloud project. In my case, I’m calling it Podcast Stats. I opted to use a service account to access my Drive, so I also created a new service account under this project. Once you have your service account created, make sure to download your credentials in json format. This file should look something like:

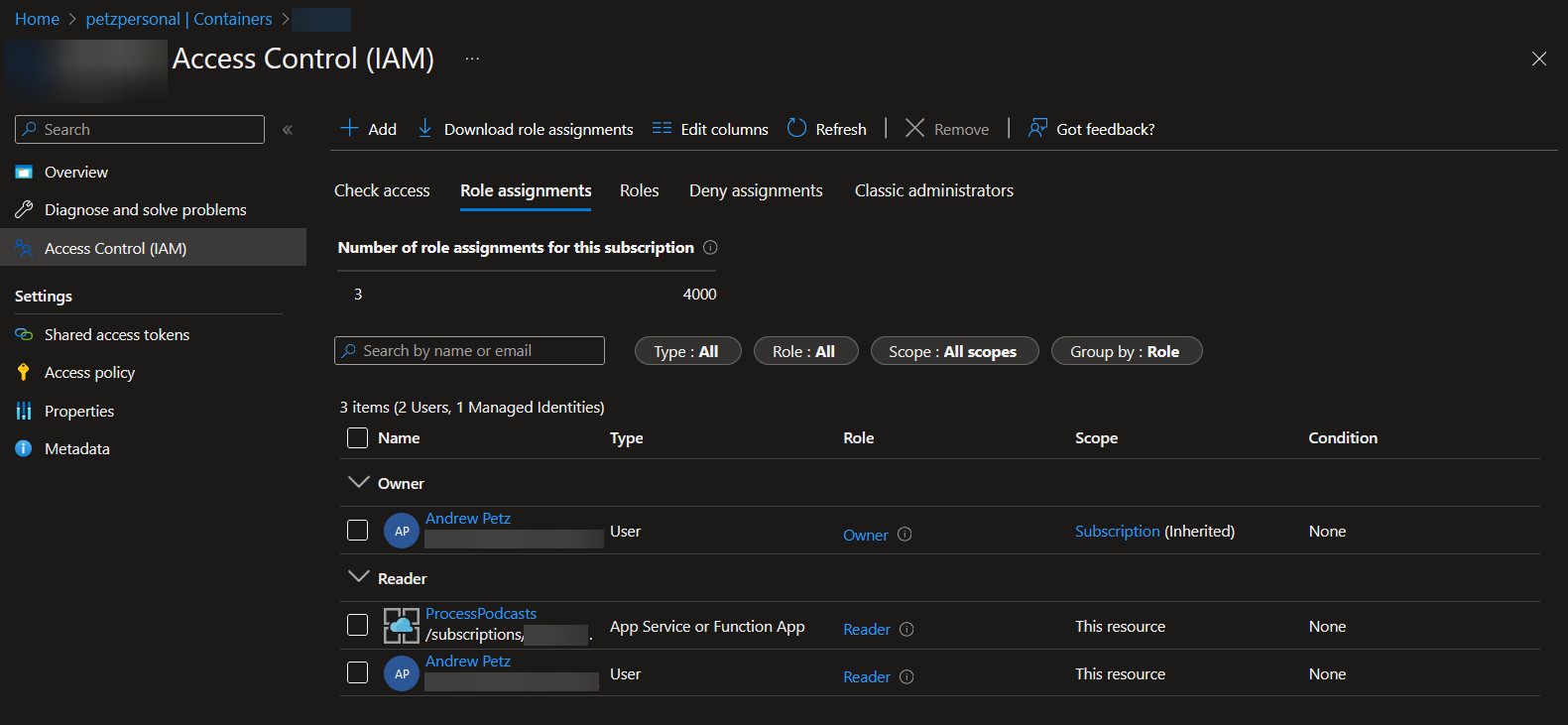

{ "type": "service_account", "project_id": "podcast-stats", "private_key_id": "xxx", "private_key": "-----BEGIN PRIVATE KEY-----\nxxx\n-----END PRIVATE KEY-----\n", "client_id": "xxxxxxxxxxxxxxxxxxxxx", "auth_uri": "https://accounts.google.com/o/oauth2/auth", "token_uri": "https://oauth2.googleapis.com/token", "auth_provider_x509_cert_url": "https://www.googleapis.com/oauth2/v1/certs", "client_x509_cert_url": "https://www.googleapis.com/robot/v1/metadata/x509/xxx.iam.gserviceaccount.com"}Once we have the file, we need a way for the Azure Function to access it. I tried just including it with the publish, but I had some issues with that approach, so I ended up using Azure Blob Storage instead. I setup a new container in the same storage account that I’m using for the CSV files, but only allowing read permissions for the Azure Functions app user as well as myself. This prevents others from accessing the file, but allows the Azure Function to access it:

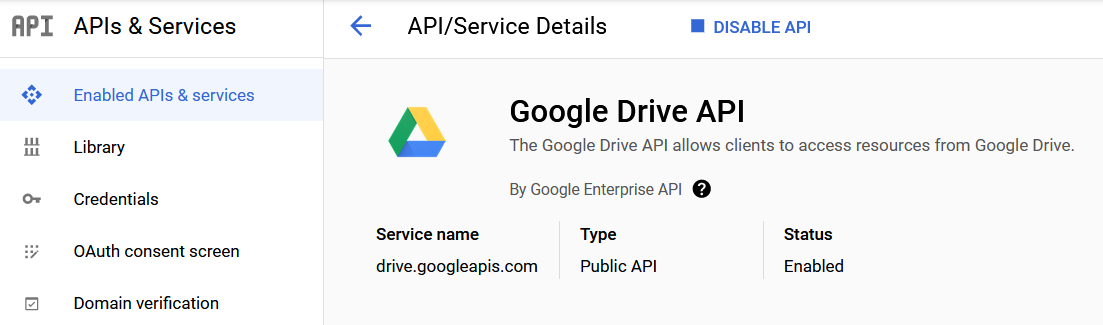

Next make sure to enable Drive in the Enabled APIs & services section of the Google Cloud console:

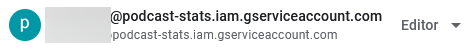

And finally, I found that I had to manually share the folder with the service account, as well as allow anyone with the link to view the files. I don’t feel like this is how it’s supposed to be done, but here we are.

Once I had Drive properly setup, I had to use the Google Drive Nuget package to authenticate and download the database file. Here’s the function I wrote:

/// <summary>/// Downloads the latest podcastAddict.db file from Google Drive, unzips it, and saves to a local file./// </summary>/// <returns>The full path of the downloaded file.</returns> public async Task<string> DownloadDbFromDrive(ILogger log){ var Scopes = new[] { DriveService.Scope.DriveReadonly }; var ApplicationName = "Podcast Stats"; GoogleCredential credential; // Load client secrets. // get the credentials.json file from Azure Blob Storage using (var httpClient = new HttpClient()) { var appSettings = new AppSettings(); var credentialsJson = await AzureBlobStorage.GetCredentialsFileString(log); using var stream = new MemoryStream(System.Text.Encoding.UTF8.GetBytes(credentialsJson)); var serviceAccountCredential = ServiceAccountCredential.FromServiceAccountData(stream); credential = GoogleCredential.FromServiceAccountCredential(serviceAccountCredential).CreateScoped(Scopes); /* The file token.json stores the user's access and refresh tokens, and is created automatically when the authorization flow completes for the first time. */ var credPath = "token.json"; log.LogInformation("Credential file saved to: " + credPath); } // Create Drive API service. var service = new DriveService(new BaseClientService.Initializer { HttpClientInitializer = credential, ApplicationName = ApplicationName }); // this will get the latest backup file from Drive var listRequest = service.Files.List(); listRequest.Q = "name contains 'PodcastAddict'"; listRequest.PageSize = 1; listRequest.Fields = "nextPageToken, files(id, name, createdTime, webContentLink)"; listRequest.OrderBy = "createdTime"; // list the files var files = (await listRequest.ExecuteAsync()).Files; files = files.OrderByDescending(x => x.CreatedTime).ToList(); if (!files.Any()) { log.LogInformation("Unable to retrieve backup file from Google Drive. No files found for query."); } var downloader = new MediaDownloader(service); // download the file and unzip it var fileUrl = files.First().WebContentLink; var memoryStream = new MemoryStream(); await downloader.DownloadAsync(fileUrl, memoryStream); memoryStream.Position = 0; var archive = new ZipArchive(memoryStream, ZipArchiveMode.Read, false); var filepath = Path.Combine(Path.GetTempPath(), Guid.NewGuid().ToString()); var fullPath = $"{filepath}\\podcastAddict.db"; var dbFile = archive.Entries.First(x => x.FullName == "podcastAddict.db").Open(); // create the temp podcastAddict.db file and write the dbFile stream to it // make sure the directory exists Directory.CreateDirectory(filepath); var fs = File.Create(fullPath); await dbFile.CopyToAsync(fs); fs.Close(); return fullPath;}Well that sure is a lot of code. Let’s go through it!

First, we need to connect the application to Google Drive. That is done in the following block, which:

- Sets the scope of the permissions that we require. In this case we only care about reading files, so we set the scope to

DriveService.Scope.DriveReadonly. - Creates a service credential file (

token.json) by reading ourcredentials.jsonfile from Azure Blob Storage and saves it to the local drive. - Using the credentials from the previous step, creates a new

DriveServiceobject that we can use to access our Drive files in the next step(s).

var Scopes = new[] { DriveService.Scope.DriveReadonly };var ApplicationName = "Podcast Stats";GoogleCredential credential; // Load client secrets.// get the credentials.json file from Azure Blob Storageusing (var httpClient = new HttpClient()){ var appSettings = new AppSettings(); var credentialsJson = await AzureBlobStorage.GetCredentialsFileString(log); using var stream = new MemoryStream(System.Text.Encoding.UTF8.GetBytes(credentialsJson)); var serviceAccountCredential = ServiceAccountCredential.FromServiceAccountData(stream); credential = GoogleCredential.FromServiceAccountCredential(serviceAccountCredential).CreateScoped(Scopes); /* The file token.json stores the user's access and refresh tokens, and is created automatically when the authorization flow completes for the first time. */ var credPath = "token.json"; log.LogInformation("Credential file saved to: " + credPath);} // Create Drive API service.var service = new DriveService(new BaseClientService.Initializer{ HttpClientInitializer = credential, ApplicationName = ApplicationName});You may have noticed the line var credentialsJson = await AzureBlobStorage.GetCredentialsFileString(log);. Here’s the GetCredentialsFileString() function:

public static async Task<string> GetCredentialsFileString(ILogger log){ try { var azureConnectionString = (await new AppSettings().AzureBlobStorageConnectionString()).Value; var blobServiceClient = new BlobServiceClient(azureConnectionString); var containerName = await new AppSettings().SecretBlobContainerName(); var blobContainerClient = blobServiceClient.GetBlobContainerClient(containerName.Value); var blobClient = blobContainerClient.GetBlobClient("credentials.json"); var downloadInfo = await blobClient.DownloadAsync(); var streamReader = new StreamReader(downloadInfo.Value.Content); return await streamReader.ReadToEndAsync(); } catch (Exception ex) { log.LogError($"Unable to download blob file - {ex.Message}"); return null; }}Now we have an object that we can use for accessing our Drive files, so the next step is to make use of it.

In order to do so, we can create a new FilesResource.ListRequest object, which is the Google Drive Nuget package’s way of encapsulating a request to retrieve a list of files from Drive. This class has a number of properties, but we only care about Q, PageSize, Fields, and OrderBy. These fields are pretty self-explanatory, other than Q (at least for me), but it’s just the name for the query parameter.

Once the listRequest is created, we call (await listRequest.ExecuteAsync()).Files to get the list of files returned by our query. This should return the latest backup file Drive with a name that contains PodcastAddict.

// this will get the latest backup file from Drivevar listRequest = service.Files.List();listRequest.Q = "name contains 'PodcastAddict'";listRequest.PageSize = 1;listRequest.Fields = "nextPageToken, files(id, name, createdTime, webContentLink)";listRequest.OrderBy = "createdTime"; // list the filesvar files = (await listRequest.ExecuteAsync()).Files;files = files.OrderByDescending(x => x.CreatedTime).ToList();if (!files.Any()){ log.LogInformation("Unable to retrieve backup file from Google Drive. No files found for query.");}Finally, we download the returned file, unzip it, and save it to the local machine. The Google Drive API contains a MediaDownloader class, which we can use to get a MemoryStream of our .backup file. Once we have that, we use a ZipArchive object to open the podcastAddict.db file inside the archive and save it. When that’s all done, this function returns the full path of the downloaded file, which we can use in the next step.

var downloader = new MediaDownloader(service); // download the file and unzip itvar fileUrl = files.First().WebContentLink;var memoryStream = new MemoryStream();await downloader.DownloadAsync(fileUrl, memoryStream);memoryStream.Position = 0; var archive = new ZipArchive(memoryStream, ZipArchiveMode.Read, false); var filepath = Path.Combine(Path.GetTempPath(), Guid.NewGuid().ToString());var fullPath = $"{filepath}\\podcastAddict.db";var dbFile = archive.Entries.First(x => x.FullName == "podcastAddict.db").Open(); // create the temp podcastAddict.db file and write the dbFile stream to it// make sure the directory existsDirectory.CreateDirectory(filepath);var fs = File.Create(fullPath);await dbFile.CopyToAsync(fs);fs.Close(); return fullPath;Read the SQLite File

Now that we have the full path of the downloaded SQLite .db file, we can use the Microsoft.Data.Sqlite library to read the contents of the database. In order to write this query I had to do a lot of digging to know where to get the data I needed - this can be done with the great tool DB Browser for SQLite.

You might notice a couple lines with MiniProfiler; ignore those for the purposes of this post, I’ll be writing a separate one on how to use that in the future.

First we open a new SqliteConnection with the Data Source that is our full file path from the previous step. Then we create a new command and write a SQL query to run against the connection object. You’ll see a comment with a number next to each column in the query - these correspond to the index of the column, which is what’s used to retrieve the data in the next step. Calling ExecuteReaderAsync() returns a SqliteDataReader, which is the object that contains all the data we will be using.

var fullPath = await new Utilities().DownloadDbFromDrive(log); var profiler = MiniProfiler.StartNew("Sandbox Profiler"); profiler.Step("Open SQLite connection and run query");await using var connection = new SqliteConnection($"Data Source={fullPath}");await connection.OpenAsync();var command = connection.CreateCommand();command.CommandText = @" SELECT episodes.podcast_id, -- 0 podcasts.name AS podcastName, -- 1 episodes.name AS episodeName, -- 2 episodes.duration_ms, -- 3 episodes.playbackDate, -- 4 bitmaps.url AS thumbnailUrl, -- 5 podcasts.feed_url, -- 6 podcasts.category, -- 7 tags.name, -- 8 podcasts.homepage -- 9 FROM episodes INNER JOIN podcasts ON podcasts._id = episodes.podcast_id LEFT JOIN bitmaps ON bitmaps._id = episodes.thumbnail_id LEFT JOIN tag_relation ON tag_relation.podcast_id = podcasts._id LEFT JOIN tags ON tags._id = tag_relation.tag_id WHERE episodes.seen_status == 1 AND episodes.duration != - 1 AND episodes.playbackDate != - 1 "; await using var reader = await command.ExecuteReaderAsync();Process the Data

For this section, I’m not going to share very much code. There’s a lot going on, it’s messy, and I’m not sure how productive it is for a blog post to be 3/4 code.

The general idea is that we create two List objects, one of Podcast and one of PodcastEpisode, loop through the results of the query, and use those results to fill the lists. Once the lists are populated, we use them to create lists of other objects that translate to the data we’re going to use for the static site.

In the while loop below, the calls to reader.Get{Type}(int) correspond to the indices from the query above. We are looping through the rows of the query results and assigning the value of each column to a new variable. Since the duration and date columns are in milliseconds, we use the special helpers in the DateTime and TimeSpan objects to get the correct values.

var podcasts = new List<Podcast>();var episodes = new List<PodcastEpisode>(); while (await reader.ReadAsync()){ var podcastId = reader.GetInt32(0); var podcastName = reader.GetString(1); var episodeName = reader.GetString(2); var episodeDuration = TimeSpan.FromMilliseconds(reader.GetInt64(3)); var playbackDate = DateTimeOffset.FromUnixTimeMilliseconds(reader.GetInt64(4)).DateTime; var thumbnailUrl = await reader.IsDBNullAsync(5) ? null : reader.GetString(5); var feedUrl = reader.GetString(6); var categoryString = await reader.IsDBNullAsync(7) ? null : reader.GetString(7); var homepage = await reader.IsDBNullAsync(9) ? null : reader.GetString(9);}Before we go any further, here are the class definitions of Podcast and PodcastEpisode:

public class Podcast{ public int Id { get; set; } public string Name { get; set; } public string Categories { get; set; } public string FeedUrl { get; set; } public string ThumbnailUrl { get; set; } public string Homepage { get; set; } /// <summary> /// The name of the image on the server. Example: <code>2341.jpg</code> or <code>42.png</code> /// </summary> public string ImageFileName { get; set; }}public class PodcastEpisode{ public int PodcastId { get; set; } public string EpisodeName { get; set; } public TimeSpan Duration { get; set; } public DateTime PlaybackDate { get; set; } public string ImageUrl { get; set; }}By far the most difficult part about the initial data load is getting the proper thumbnails. For most podcasts in the database, the URL of the thumbnail isn’t actually stored - instead, the bitmaps.url column points to a local file that I’m assuming is downloaded by Podcast Addict when the podcast is first subscribed to. To get around this, I had to write some custom logic that loads the XML feed of the podcast and checks if there is an image there first. If not, I check the bitmap url from the database, and if that is a string pointing to a local file, I ignore the image. Using this workflow I was able to get the thumbnails for all but six of my podcasts, for which I manually downloaded the thumbnails and uploaded to Azure Blob Storage. Three of those six are from Patreon, and the thumbnail links in those feeds didn’t have a file extension, which makes them difficult to download correctly - so I just ignore those.

var newPodcast = new Podcast{ Id = podcastId, Name = podcastName, Categories = categoryString, FeedUrl = feedUrl, Homepage = homepage}; // TODO: handle Patreon images - they don't have the normal file extensionsusing var xmlReader = XmlReader.Create(feedUrl);var feed = SyndicationFeed.Load(xmlReader);var imageUrl = feed.ImageUrl?.ToString();if (!string.IsNullOrWhiteSpace(imageUrl)){ if (imageUrl.Contains('?')) { imageUrl = imageUrl.Remove(imageUrl.IndexOf('?')); } var fileExtension = Path.GetExtension(imageUrl); if (fileExtension.Equals(".jpg", StringComparison.OrdinalIgnoreCase) || fileExtension.Equals(".jpeg", StringComparison.OrdinalIgnoreCase)) { newPodcast.ImageFileName = $"{newPodcast.Id}.jpg"; } else if (fileExtension.Equals(".png", StringComparison.OrdinalIgnoreCase)) { newPodcast.ImageFileName = $"{newPodcast.Id}.png"; } newPodcast.ThumbnailUrl = imageUrl;}else if (!string.IsNullOrWhiteSpace(thumbnailUrl) && !thumbnailUrl.StartsWith("/storage")){ if (thumbnailUrl.Contains('?')) { thumbnailUrl = thumbnailUrl.Remove(thumbnailUrl.IndexOf('?')); } var fileExtension = Path.GetExtension(thumbnailUrl); if (fileExtension.Equals(".jpg", StringComparison.OrdinalIgnoreCase) || fileExtension.Equals(".jpeg", StringComparison.OrdinalIgnoreCase)) { newPodcast.ImageFileName = $"{newPodcast.Id}.jpg"; } else if (fileExtension.Equals(".png", StringComparison.OrdinalIgnoreCase)) { newPodcast.ImageFileName = $"{newPodcast.Id}.png"; } newPodcast.ThumbnailUrl = thumbnailUrl;}else{ log.LogWarning($"Unable to find image for podcast {newPodcast.Id} - {newPodcast.Name}");} podcasts.Add(newPodcast); var episode = new PodcastEpisode{ PodcastId = podcastId, EpisodeName = episodeName, Duration = episodeDuration, PlaybackDate = playbackDate}; episodes.Add(episode);Upload Thumbnails to Azure Blob Storage

Since I decided to use Bunny CDN on top of Azure Blob Storage to store my CSV files, I decided to use the same tools for the thumbnail images that are displayed on the site. The interesting thing about the image upload is that I only do it once every four months or so, on the last run of the month. This should help save some resources (however insignificant), and the thumbnails shouldn’t change much if at all over time, so there’s no reason to re-upload them all every week. Note that the ImageFileUpload object just contains the image url and the name of the file, which is {id}.{extension}.

profiler.Step("Upload thumbnails to Azure");// upload thumbnail images to Azure Blob Storage// only do this every four months or so// to accomplish this, check if the current month modulo 4 == 0 (will only trigger in April, August, and December)// then, check if the current day of the month is within a week of the last day of the month// this should prevent running this each time we run the Azure Function var now = DateTime.Now;if (now.Month % 4 == 0 && DateTime.DaysInMonth(now.Year, now.Month) - now.Day <= 7){ var imageQueue = new List<ImageFileUpload>(); foreach (var p in podcasts) { if (!string.IsNullOrWhiteSpace(p.ThumbnailUrl)) { imageQueue.Add(new ImageFileUpload { ImageUrl = p.ThumbnailUrl, Filename = p.ImageFileName }); } else { log.LogWarning($"Podcast {p.Name} does not have a valid thumbnail url"); } } log.LogInformation("Queueing up image uploads"); await AzureBlobStorage.UploadImageFileList(imageQueue);}I also used the Azure Blob Storage Queue object to parallelize the uploads (for the CSV files as well as the images). I used this article’s code as a starting point. Once I implemented the queue, the entire process from start to finish went from taking about five minutes to taking one minute.

For each image in the list, this code grabs the url, gets the Stream object from that url, and then adds the blobClient.UploadAsync() Task object to the queue. Once all images are in the queue, it just awaits the task list with Task.WhenAll(). This same general idea is used when uploading the CSV files later in the process.

public static async Task UploadImageFileList(List<ImageFileUpload> images){ try { var tasks = new Queue<Task<Response<BlobContentInfo>>>(); var azureConnectionString = (await new AppSettings().AzureBlobStorageConnectionString()).Value; var blobServiceClient = new BlobServiceClient(azureConnectionString); var blobContainerClient = blobServiceClient.GetBlobContainerClient(AppSettings.BlobContainerName); // loop through images that are NOT from patreon // have to handle that in a special way, but I don't yet know how that's going to be foreach (var image in images.Where(x => !x.ImageUrl.Contains("patreon", StringComparison.OrdinalIgnoreCase))) { var imageUrl = image.ImageUrl; var fileName = image.Filename; var webClient = new HttpClient(); //webClient.DefaultRequestHeaders.Add("user-agent", "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/51.0.2704.103 Safari/537.36"); var stream = await webClient.GetStreamAsync(imageUrl); var fileExtension = Path.GetExtension(imageUrl); var contentType = ""; if (fileExtension.Equals(".jpg", StringComparison.OrdinalIgnoreCase) || fileExtension.Equals(".jpeg", StringComparison.OrdinalIgnoreCase)) { contentType = "image/jpg"; } else if (fileExtension.Equals(".png", StringComparison.OrdinalIgnoreCase)) { contentType = "image/png"; } var blobClient = blobContainerClient.GetBlobClient(fileName); var blobHttpHeader = new BlobHttpHeaders { ContentType = contentType }; Console.WriteLine($"Uploading {image.Filename}"); tasks.Enqueue(blobClient.UploadAsync(stream, new BlobUploadOptions { HttpHeaders = blobHttpHeader })); } await Task.WhenAll(tasks); } catch (Exception ex) { Console.ForegroundColor = ConsoleColor.Red; Console.WriteLine($"Unable to download or upload image - {ex.Message}"); Console.ResetColor(); }}Create CSV Files from Data

Because of the length of this section of the code, I’m not going to share all of it. I used the fantastic CsvHelper Nuget package, which allows you to automatically generate CSV files based on objects of type List<T>. For each CSV file that I generate, I need a class to represent that data.

Monthly Hours

The number of hours listened per month, starting at the beginning of my Podcast Addict listening history. I also stored the number of episodes for each month so I could get the hours per episode and number of episodes per month.

public class MonthlyStats{ public string Name { get; set; } public double Hours { get; set; } public int Episodes { get; set; }}Total Time Listened

The total amount of time produced by adding together the runtime of every podcast episode I’ve ever listened to. Duration is stored in milliseconds, so I used the TimeSpan.FromTicks() helper to get a TimeSpan object. I also store the total time listened while taking my listening speed into account in this list. I listen to podcasts at 1.8x speed, so I was able to get the proper value like so:

var fastTimeListened = TimeSpan.FromTicks((long)(totalTimeListened.Ticks / 1.8));The TotalTimeListened object goes to millisecond specificity.

public class TotalTimeListened{ public int Days { get; set; } public int Hours { get; set; } public int Minutes { get; set; } public int Seconds { get; set; } public int Milliseconds { get; set; }}Top Ten Podcasts Per Year

To get this data, I loop through all episodes listened to for each year and store that data in a PodcastTime object. This stores some info about the podcast itself as well as the total time listened to (with and without the 1.8x speed boost).

This is per year, so after the loop I end up with four topTen{yyyy}.csv files.

public class PodcastTime{ public int Id { get; set; } public string Name { get; set; } public string ImageFileName { get; set; } public string Homepage { get; set; } public long Ticks { get; set; } public int Days { get; set; } public int Hours { get; set; } public int Minutes { get; set; } public int Seconds { get; set; } public int Milliseconds { get; set; } public long FastTicks { get; set; } public int FastDays { get; set; } public int FastHours { get; set; } public int FastMinutes { get; set; } public int FastSeconds { get; set; } public int FastMilliseconds { get; set; }}As all of these files are being created, they are being added to a List<CsvFileUpload> to queue for upload at the end of this block.

Purge the Bunny CDN Cache

Finally! After all of that, we are at our last step: purging the Bunny CDN cache. I’ve configured a Bunny CDN Pull Zone on top of my Azure Blob Storage instance, and since we just finished updating all of the files in use by the static site, we need to tell Bunny to update those files in their cache. We do so by calling their REST API purge cache post by tag endpoint using the RestSharp library.

// finally, purge the Bunny cache so the it re-downloads the CSV// https://docs.bunny.net/reference/pullzonepublic_purgecachepostbytagprofiler.Step("Bunny");log.LogInformation("Purging Bunny cache");var bunnyApiKey = await new AppSettings().BunnyApiKey();var pullZoneId = await new AppSettings().BunnyPullZoneId(); var client = new RestClient($"https://api.bunny.net/pullzone/{pullZoneId.Value}/purgeCache"); var request = new RestRequest() .AddHeader("Content-Type", "application/json") .AddHeader("AccessKey", bunnyApiKey.Value) .AddParameter("application/json", "{\"CacheTag\":\"string\"}", ParameterType.RequestBody); var response = await client.PostAsync(request); if (!response.IsSuccessful){ log.LogInformation($"Error purging Bunny cache: {response.StatusCode}");} log.LogInformation("Success!"); await profiler.StopAsync();log.LogInformation(profiler.RenderPlainText());The Static Site

Now that we’ve made it through the hardcore programming bits, I want to talk about the static site itself. It was important to me that the website I created be static - while it would theoretically be simpler to just throw the statistics into a database and use a dynamic web app that pulls from there, the hosting for both the site and the database would be more complex (and potentially expensive!). I also enjoy the process of building static websites, whether it’s using a static site generator or just plain old HTML/CSS/JS, and on top of that, I wanted to use this project as an excuse to get back into TailwindCSS.

I had a few ideas on how I could update a static website using a scheduled task, but I ended up trying two of them:

- Using a library to commit files to the GitHub repository directly

- Pulling the data from CSV files on a remote server (in this case, Azure Blob Storage)

As you saw above, I went with option 2.

Hosting

The first step to getting a static site created, at least for me, is figuring out hosting. I used to use Netlify for my static sites, but moved away from them after they updated their starter plan pricing. Another option that I looked into was Cloudflare Pages, but after recent events, I decided to stick with good old GitHub Pages. GitHub Pages is free and integrates really well with GitHub Actions to get automated CI/CD for your site.

The Domain

I’ve previously used Namecheap for all of my hosting needs, but after finding out about Porkbun it wasn’t long before I transferred all of my domains over there. Namecheap is perfectly fine, but something about Porkbun makes it fun to use. Plus, their customer service is absolutely top-notch.

I decided to use a subdomain for this project rather than a directory in my main site for a couple reasons:

- I wanted to keep the code for the podcast stats site in a completely separate repository

- I planned on using a different technology stack for the podcast stats site than I use for my personal site

Figuring out how to setup the subdomain with GitHub Pages was a bit of a pain, but here’s what I ended up with:

- A CNAME record for

podcaststatswith a value ofandrewpetz.github.io - In the podcaststats.andrewpetz.com GitHub repository, under Settings -> Pages, I set the custom domain to

podcaststats.andrewpetz.comand checked “enforce HTTPS”

GitHub Actions

As I mentioned above, GitHub Actions integrates really well with GitHub repos to get automated CI/CD for your site. After a lot of trial and error, this is what I ended up with for my workflow file:

name: Deploy site to Pages on: # Runs on pushes targeting the default branch push: branches: ["main"] # Allows you to run this workflow manually from the Actions tab workflow_dispatch: inputs: logLevel: description: "Log level" required: true default: "warning" # Sets permissions of the GITHUB_TOKEN to allow deployment to GitHub Pagespermissions: contents: read pages: write id-token: write # Allow one concurrent deploymentconcurrency: group: "pages" cancel-in-progress: true jobs: # Build job build: runs-on: ubuntu-latest defaults: run: working-directory: ./PodcastStats/PodcastStats.Site steps: - name: Checkout uses: actions/checkout@v3 - name: NPM or Yarn install with caching with: # Option to enable or disable use of a lock file (package-lock.json/yarn.lock) useLockFile: true working-directory: ./PodcastStats/PodcastStats.Site - name: Setup Pages id: pages uses: actions/configure-pages@v2 - name: Build site run: npm run build - name: Upload artifact uses: actions/upload-pages-artifact@v1 with: path: ./PodcastStats/PodcastStats.Site/_src # Deployment job deploy: environment: name: github-pages url: $ runs-on: ubuntu-latest needs: build steps: - name: Deploy to GitHub Pages id: deployment uses: actions/deploy-pages@v1Whew, that’s a lot. Let’s go through this in more detail.

Setup

name: Deploy site to Pages on: # Runs on pushes targeting the default branch push: branches: ["main"] # Allows you to run this workflow manually from the Actions tab workflow_dispatch: inputs: logLevel: description: "Log level" required: true default: "warning" # Sets permissions of the GITHUB_TOKEN to allow deployment to GitHub Pagespermissions: contents: read pages: write id-token: write # Allow one concurrent deploymentconcurrency: group: "pages" cancel-in-progress: trueThis block is the setup of the workflow. It sets the name of the action, tells it to run when there is a push to the main branch, adds a button to the Actions tab to manually run this action, sets the permissions to allow the workflow to deploy to GitHub Pages, and allows one concurrent deployment. That means it will automatically stop any in-progress runs and start over if I push multiple times before it has a chance to finish.

Jobs

There are two jobs defined in the workflow file: build and deploy. The workflow runs these one after the other.

jobs: # Build job build: runs-on: ubuntu-latest defaults: run: working-directory: ./PodcastStats/PodcastStats.Site steps: - name: Checkout uses: actions/checkout@v3 - name: NPM or Yarn install with caching with: # Option to enable or disable use of a lock file (package-lock.json/yarn.lock) useLockFile: true working-directory: ./PodcastStats/PodcastStats.Site - name: Setup Pages id: pages uses: actions/configure-pages@v2 - name: Build site run: npm run build - name: Upload artifact uses: actions/upload-pages-artifact@v1 with: path: ./PodcastStats/PodcastStats.Site/_src # Deployment job deploy: environment: name: github-pages url: $ runs-on: ubuntu-latest needs: build steps: - name: Deploy to GitHub Pages id: deployment uses: actions/deploy-pages@v1So how do these work? The build job is set to run on the latest LTS (long-term support) version of Ubuntu. I manually set the working directory to ./PodcastStats/PodcastStats.Site because this repo contains multiple projects, with the static site located within that folder. Then within the build job there are multiple steps: checkout, NPM install, setup pages, build site, and upload artifact.

Checkout

As you can see, this job is only one line: uses: actions/checkout@v3. One of the great features of GitHub Actions is the ability to use actions defined by others. In this case, it’s the default git checkout action written by GitHub itself. In their words, “this action checks-out your repository under $GITHUB_WORKSPACE, so your workflow can access it.”

NPM install

Using the npm-install action by bahmutov, with a working directory of ./PodcastStats/PodcastStats.Site, this job runs npm install. The neat part of the action we’re using is that it uses caching by default, which greatly speeds up the install process after the first run. I also specify useLockFile as true, even though that’s the default, because I was having issues with some of my NPM packages if I didn’t.

Setup pages

Uses the configure-pages action to… configure GitHub Pages for publish. See this page for more details about what it actually does.

Build site

Runs the command npm run build. I have that setup using a package.json script to run the command npx tailwindcss -i _src/_sass/styles.css -o _src/styles/app.css --minify, which builds our minified CSS file with TailwindCSS.

Upload artifact

Uses the default upload-pages-artifact to upload the ./PodcastStats/PodcastStats.Site/_src folder. This uploaded artifact is then available for the deploy job. Speaking of which…

Now that the build job is complete, the action will run the deploy job. This consists of one step with various configurations attached to it. In this case, we set the configurations based on the deploy-pages action defaults.

And voilà! Our static site has been deployed to GitHub Pages. Now that that’s setup, let’s take a look at the site itself.

Writing Our Static Site

My vision for the site was similar to the site that inspired this project - show some fun charts and/or numbers that describe my podcast listening history. To that end, I decided to use Chart.js to create the charts using JavaScript. For the structure of the site, I use the static site generator Jekyll with TailwindCSS for my styling. I chose these because it’s a stack I’ve used fairly extensively in the past, and I enjoy writing Jekyll/Tailwind code. I’m planning on writing a post in the future outlining how I set these up, so I won’t go into that here.

The general idea of using Chart.js for this is to read the CSV into a JavaScript object via the CDN URL with D3.js, then use that object to fill the HTML elements for displaying that data. Here’s what the code looks like for the total time listened text at the top of the page (which doesn’t actually use Chart.js - I’ll get to that next):

const blobUrl = "https://cdn.andrewpetz.com/podcaststats/"; var timeListened = d3.csv(blobUrl + "totalTimeListened.csv", function (d) { return { days: d.Days, hours: d.Hours, minutes: d.Minutes, seconds: d.Seconds, ms: d.Milliseconds };});timeListened.then(function (result) { var total = result[0]; $("#txtDays").text(total.days); $("#txtHours").text(total.hours); $("#txtMinutes").text(total.minutes); $("#txtSeconds").text(total.seconds); $("#txtMilliseconds").text(total.ms);});All of this occurs on $("document").ready(). The HTML structure looks like this:

<p>Total time listened</p><dl> <div> <dt>Days</dt> <dd id="txtDays"></dd> </div> <div> <dt>Hours</dt> <dd id="txtHours"></dd> </div> <div> <dt>Minutes</dt> <dd id="txtMinutes"></dd> </div> <div> <dt>Seconds</dt> <dd id="txtSeconds"></dd> </div> <div> <dt>Milliseconds</dt> <dd id="txtMilliseconds"></dd> </div></dl>Notice that I removed the classes. The unfortunate effect of using Tailwind is having fairly unwieldy classes in your HTML, which would make it difficult to read here.

Now here’s an example that uses Chart.js: hours listened by month. This is a bar chart where the x-axis is months and the y-axis is number of hours listened. It also shows the total hours listened, the number of episodes, and the hours per episode when hovering over an individual bar.

d3.csv(blobUrl + "monthlyStats.csv").then(loadMonthlyStats); function loadMonthlyStats(data) { const footer = (tooltipItems) => { var hoursPerEpisode = 0; var tooltipItem = tooltipItems[0]; var e = episodes[tooltipItem.parsed.x]; hoursPerEpisode = hours[tooltipItem.parsed.x] / e; return "Episodes: " + e + " | Hours per episode: " + hoursPerEpisode.toFixed(2); }; var chartLabels = data.map(function (d) { return d.Name }); var hours = data.map(function (d) { return d.Hours }); var episodes = data.map(function (d) { return d.Episodes }); const ctx = $("#chrtMonthlyStats"); const myChart = new Chart(ctx, { type: "bar", data: { labels: chartLabels, datasets: [{ label: "Hours listened", data: hours, backgroundColor: [ "rgba(255, 99, 132, 0.7)", "rgba(54, 162, 235, 0.7)", "rgba(255, 206, 86, 0.7)", "rgba(75, 192, 192, 0.7)", "rgba(153, 102, 255, 0.7)", "rgba(255, 159, 64, 0.7)" ], borderColor: [ "rgba(255, 99, 132, 1)", "rgba(54, 162, 235, 1)", "rgba(255, 206, 86, 1)", "rgba(75, 192, 192, 1)", "rgba(153, 102, 255, 1)", "rgba(255, 159, 64, 1)" ], borderWidth: 1 }] }, options: { scales: { y: { beginAtZero: true, grid: { display: true, color: "rgba(229, 231, 235, 0.1)" } }, x: { grid: { display: true, color: "rgba(229, 231, 235, 0.1)" } } }, plugins: { legend: { display: false }, tooltip: { callbacks: { footer: footer, } } } } });}The footer object here is what displays when hovering over a bar. chartLabels, hours, and episodes correspond to data that is displayed on the chart itself. Finally, myChart is the chart object that Chart.js uses to generate the <canvas> element to display the data. Here is where we set the background color, border color and width of the bars, as well as other options like the x and y scales and whether to display a legend. For more info check out the Chart.js configuration documentation.

Conclusion

This post turned out much longer and took a lot more time than I originally anticipated. The project itself has been one of the most rewarding that I’ve worked on. It feels great to start something and actually finish it, rather than being relegated to the ever-growing pile of abandoned ideas.

If there’s anything else you think I should include in this article, or any questions you have, please feel free to use the comment box below or send me an email at andrewpetz at proton.me. Thanks for reading!